Three years ago, getting a robot to reliably pick up objects required armies of engineers: hardware specialists designing custom grippers, computer vision teams training object detection models, control theorists tuning PID loops, and mechanical engineers iterating on actuator designs. Today, a college student can download an open-source vision-language-action model, fine-tune it over a weekend [1], and achieve results that would have taken industry teams months to accomplish.

This dramatic shift in what's possible got me asking the question that's echoing among researchers and investors alike: Are we nearing the field's long-awaited "ChatGPT moment"?

My thesis: we're not quite there yet, but the pieces are falling into place. With the release of the π0.5 model [2] by Physical Intelligence, we may be at the GPT-3 stage of robotics. Models are beginning to reason across modalities, adapt in real time, and take meaningful actions in the physical world. What’s needed now is rapid hardware and model iteration, robust data collection infrastructure, and the ability to safely deploy and learn in the real world.

Over the past several months, I’ve immersed myself in this fast-evolving world — speaking with founders, engineers, investors, and customers at the cutting edge. This piece is my field report: what does a ChatGPT moment look like, where are we today, what’s different now, what’s next, and finally, my take on winners.

What does a “ChatGPT moment” look like?

The true “wow” moment will feel almost surreal, just like the first time many of us typed a question into ChatGPT and got back a shockingly good answer. In robotics, I picture two scenes that will make it real for everyone:

The blue-collar colleague

A new robot rolls onto the floor, watches an experienced worker for a couple of shifts, asks a handful of clarifying questions (“Do these fragile SKUs need special handling?”), and by day three is quietly taking over the dull, heavy lifts—freeing its human coworker for higher-value tasks.

The invisible home helper

While you’re at work or asleep, a tireless home bot glides through the house. It tidies rooms, loads laundry, and maybe even helps out in the kitchen. You notice not because it boasts flashy tricks, but because you stop thinking about everyday chores.

When moments like these happen without a special demo, just in normal life, that’s when robotics will have its ChatGPT-level debut—and we’ll all feel the ground shift under our feet.

Where are we today?

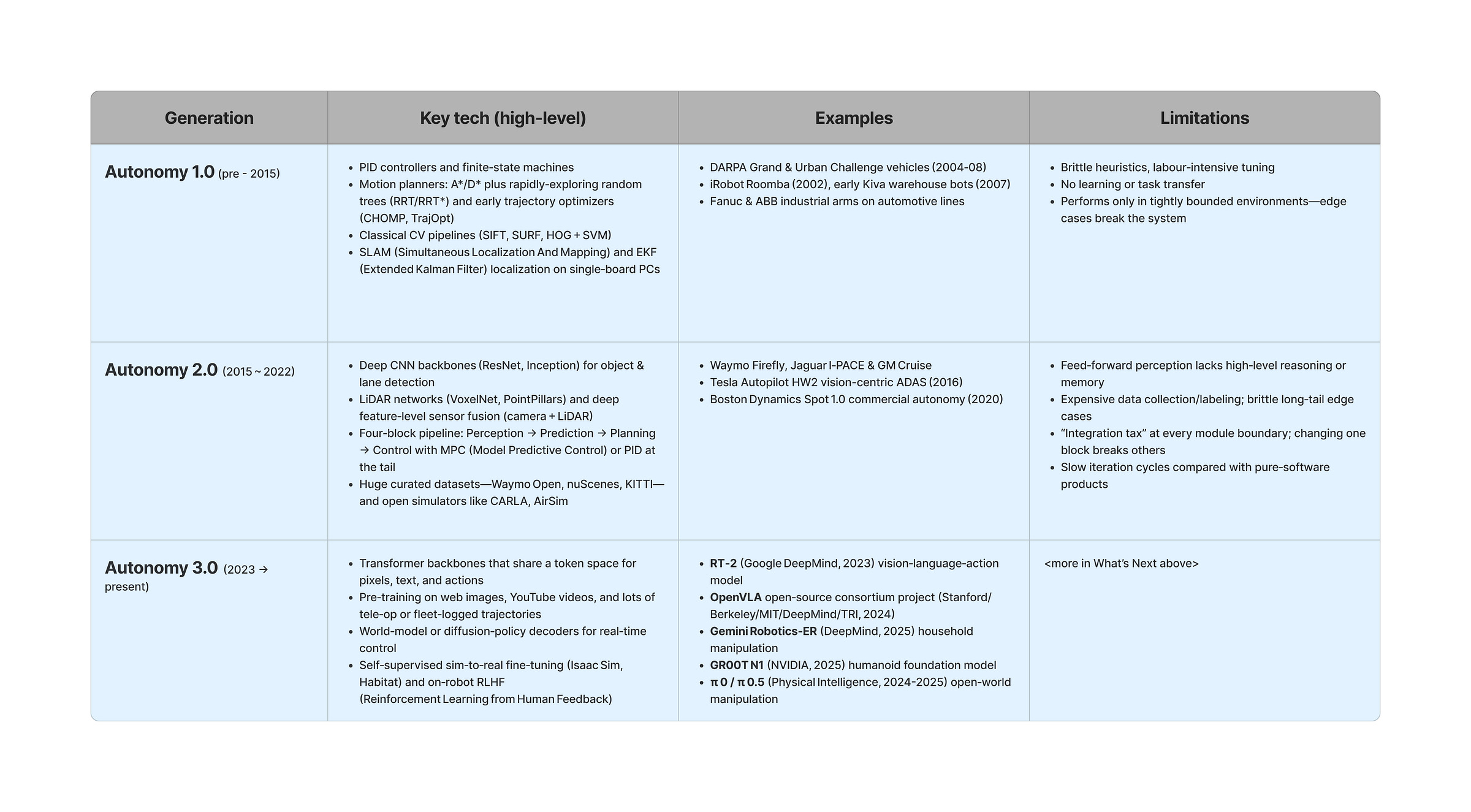

Why the sudden surge of excitement in robotics? A useful lens is to map autonomy (i.e., the ability to perceive, act, and adapt over time) into generations, borrowing from Andrej Karpathy’s Software 1.0, 2.0 [3], and 3.0 [4] framing. Think of them as snapshots of the dominant recipe for building real‑world autonomy:

Autonomy 1.0 — Hand‑Crafted Robotics (pre‑2015)

Rule-based control • classical vision • zero learning

Robots ran on hand-tuned heuristics and feature-based vision; every behavior was hard-coded. Examples include DARPA Grand Challenge cars, iRobot Roomba vacuums, and early Kiva warehouse bots—great in tightly scripted settings but unreliable in the wild.

Autonomy 2.0 — Modular Deep‑Learning Stack (≈ 2015–2022)

CNN perception • rule-based planner • low-level control

Deep CNNs took over vision, but planning and control stayed rule-based. Waymo Firefly [5], Tesla Autopilot HW2 [6], and Boston Dynamics Spot 1.0 [7] show the pattern: a “Perception → Prediction → Planning → Control” pipeline that works until edge cases expose the seams.

Autonomy 3.0 — Foundation‑Model Autonomy (2023 → present)

Vision-Language-Action Transformers trained at web + fleet scale

One Transformer ingests video, text, and sensor data, then outputs actions, and can be fine-tuned across tasks. Google’s RT-2 [8], NVIDIA’s GR00T N1 [9], OpenVLA [10], and Physical Intelligence’s π0.5 hint at robots that can learn new jobs in days and explain their choices in plain English.

Autonomy 4.0 — Collaborative Autonomy (future)

Fleet-level hive mind • Instinctive human hand-offs

Looking beyond Autonomy 3.0, I envision Autonomy 4.0 where intelligent robots collaborate with each other, with humans, and with dumb-bots effortlessly. They sync to a shared world map, divvy up work on the fly [11], and read human intent like a teammate: swarms of forklifts reshuffle a warehouse mid-shift, drone crews reroute around storms in real time, and a home helper hands you the drill just as you reach for it.

For more details on each generation, see the table in the appendix.

What’s different now?

The breakthroughs in Autonomy 3.0 explain the flood of capital and talent into robotics start‑ups—from construction bots to warehouse pickers to humanoid home assistants.

Unified, end‑to‑end learning stack — One transformer backbone replaces half‑a‑dozen hand‑off points, slashing integration effort and letting the entire system learn as a whole.

Open‑vocabulary, multimodal perception and reasoning — Robots can follow plain‑English commands, recognize brand‑new objects by name or description, and plan before acting.

Rapid task adaptation: A single model pretrained on internet-scale data can be fine‑tuned for forklifts, drones, or humanoids in days, not months, because most of the “world knowledge” is already baked in.

Self‑reinforcing data flywheel — Every deployed unit streams rare failures and tele‑op demos back to the cloud; nightly offline RL pushes fresh weights, so the fleet improves while you sleep.

Several startups are at the forefront of these advancements, pushing the boundaries of what's possible in robotics and more generally in Embodied AI:

Fullstack humanoids: Tesla Optimus, Figure AI, 1X, Agility Robotics, Boston Dynamics, Zhiyuan Robotics (CN), Apptronik, Sanctuary AI, The Bot Company, Genesis, Fauna Robotics, K-Scale, Unitree (CN), UBTech (CN)

Universal robot intelligence & platforms: Physical Intelligence, Skild AI, Gemini Robotics, Hugging Face LeRobot, Reimagine Robotics

Mobile manipulators & AMRs: Amazon Robotics, Dexterity, Collaborative Robotics, Keenon (CN)

Autonomous vehicles: Wayve AI, Tesla FSD, Waymo

Defense autonomy: Shield AI, Anduril, Blue Water Autonomy, Scout AI

…and many more.

What’s next?

A truly general‑purpose robot—able to move from a warehouse to a kitchen to a construction site with minimal re‑training—remains a decade out. Below is a non-exhaustive list of areas across intelligence, hardware, operations, and safety where the field faces challenges, along with the milestones needed to credibly claim 'Autonomy 3.0'.

1. Better cognition & memory

Gap: Models still struggle with long-horizon reasoning and cannot keep track of world state that is out of sight [12][13].

Milestones to unlock: Models that can keep goals, sub-goals and world state in working memory for minutes, not seconds. Additionally, robots that can build and query a 4-D map that survives power cycles.

2. Real-world learning

Gap: Current models struggle to adapt to the real world.

Milestone to unlock: A combination of on-the-job imitation learning and reinforcement learning. Show the robot what to do, have it imitate the demonstration, and then let it improve by trial and error directly in the real world [14][15][16][17].

3. Efficient edge inference

Gap: Current models struggle to balance accuracy with speed and power consumption [18].

Milestone to unlock: Backbones that fuse video, world-model, and action in one pass while staying lean, balancing accuracy against power requirement and latency.

4. High bandwidth data collection

Gap: Collecting and labeling real-world robot experience is slow and expensive. Further, the industrial robotics market is fragmented across large incumbents such as FANUC and ABB, which makes industry-wide data consolidation virtually impossible.

Milestone to unlock: High-fidelity, tele-op tools that stream hours of annotated experience each shift and add domain randomization & adaptation. For related work, see xdof.

5. Simulation that matches reality - bridging the sim2real gap

Gap: Behaviors that work in simulation often stumble on hardware.

Milestone to unlock: Photorealistic renderers are already good, but we need physics engines that simulate contact accurately enough that a skill learned virtually lands within 10% of its simulated performance on real hardware. For related work, see Genesis and ZeroMatter.
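To make the 10% target concrete, here is a minimal sketch of one way to score the sim2real gap. It assumes the gap is measured as the relative drop in task success rate when a policy moves from simulation to hardware; that definition, the function names, and the example numbers are illustrative assumptions, not a standard benchmark.

```python
def sim2real_gap(sim_success_rate: float, real_success_rate: float) -> float:
    """Relative drop in success rate when moving from simulation to hardware.

    Assumed definition (hypothetical): (sim - real) / sim, where both inputs
    are success rates in [0, 1] measured on the same task.
    """
    if not 0.0 < sim_success_rate <= 1.0:
        raise ValueError("sim_success_rate must be in (0, 1]")
    if not 0.0 <= real_success_rate <= 1.0:
        raise ValueError("real_success_rate must be in [0, 1]")
    return (sim_success_rate - real_success_rate) / sim_success_rate


def meets_milestone(sim_success_rate: float,
                    real_success_rate: float,
                    max_gap: float = 0.10) -> bool:
    """True when the real-world result lands within max_gap of simulation."""
    return sim2real_gap(sim_success_rate, real_success_rate) <= max_gap


# Illustrative numbers only: a grasping skill that succeeds 90% of the time
# in simulation.
print(meets_milestone(0.90, 0.72))  # hardware succeeds 72% of the time
print(meets_milestone(0.90, 0.85))  # hardware succeeds 85% of the time
```

Under this reading, the first case loses roughly a fifth of its simulated performance and misses the target, while the second stays inside the 10% band.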

6. Hardware at smartphone speed and price

Gap: Joints, sensors, and batteries improve in multi-year cycles and cost too much. Current battery packs cap runtime at 2-4 hours, preventing shift-long operations.

Milestone to unlock: High-torque / low-ratio actuators costing a few hundred dollars and batteries with higher energy density [19] are required for broad adoption. Hardware in general requires significantly faster iteration cycles, mirroring the smartphone world.

7. Contact‑rich manipulation

Gap: Manipulation is not yet adequate for very delicate tasks.

Milestone to unlock: Sub-millimeter accuracy and sub-Newton force control with sub-millisecond latency “sense-and-touch” loops that let robots perform highly dexterous and delicate tasks. For related work, see Tacta Systems.

8. Always-on safety rails

Gap: Formal guarantees that robots won’t harm people and infrastructure are missing [20].

Milestone to unlock: Deterministic, provable 'safety rails' that wrap any learned policy and guarantee safe forces, speeds, and workspaces. For related work, see Saphira AI.
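As a rough illustration of what "wrapping" a learned policy could look like, the sketch below clamps every command to fixed speed, force, and workspace limits before it reaches the hardware. All names here (`SafetyLimits`, `Command`, `safe_policy`) and the limit values are hypothetical. A clamp like this is deterministic, but it is not a formal proof of safety; a real system would need verified dynamics and certified hardware interlocks on top.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class SafetyLimits:
    max_speed: float      # m/s, hypothetical limit
    max_force: float      # N, hypothetical limit
    workspace_min: Vec3   # lower corner of the allowed box, meters
    workspace_max: Vec3   # upper corner of the allowed box, meters


@dataclass(frozen=True)
class Command:
    target: Vec3          # commanded end-effector position, meters
    speed: float          # commanded speed, m/s
    force: float          # commanded contact force, N


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def apply_safety_rails(cmd: Command, limits: SafetyLimits) -> Command:
    """Project a command into the allowed speed, force, and workspace box."""
    target = tuple(
        clamp(x, lo, hi)
        for x, lo, hi in zip(cmd.target, limits.workspace_min, limits.workspace_max)
    )
    return Command(
        target=target,
        speed=clamp(cmd.speed, 0.0, limits.max_speed),
        force=clamp(cmd.force, 0.0, limits.max_force),
    )


def safe_policy(policy: Callable[[object], Command],
                limits: SafetyLimits) -> Callable[[object], Command]:
    """Wrap any policy so its output always passes through the rails."""
    def wrapped(observation: object) -> Command:
        return apply_safety_rails(policy(observation), limits)
    return wrapped


# Usage: a stand-in "learned" policy that asks for something unsafe.
limits = SafetyLimits(max_speed=0.5, max_force=20.0,
                      workspace_min=(0.0, 0.0, 0.0),
                      workspace_max=(1.0, 1.0, 1.0))
reckless = lambda obs: Command(target=(1.5, -0.2, 0.4), speed=2.0, force=80.0)
print(safe_policy(reckless, limits)(None))
```

The design point is that the wrapper never inspects the policy's internals, so the same rails can sit in front of any learned model.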

If even a few of these click in the next few years, Autonomy 3.0 will be within reach.

Who wins — my prediction

Many teams sense a Software 3.0 moment for the physical world; the next five years will show which Autonomy 3.0 pioneers become unicorns—and which simply leave a rich data trail for faster learners.

Immediate customer value wins the sprint. The most durable companies start with a painful, cash‑burning workflow (say, pallet unloading or mail sorting) and ship a robot that delivers value on day one. They live on the customer’s floor, gather data side‑by‑side with operators, and refine until reliability beats novelty. I've seen this first-hand while applying AI to the real world: the physical world is messy, and delivering a highly reliable end-to-end solution requires staying extremely close to the domain and the customer. The first Autonomy 3.0 company to reach multiple billions of dollars in recurring revenue will likely master a single workflow and expand from that beachhead, much like Intuitive Surgical did [21], but for the AI era.

Moonshot humanoids carry the highest execution risk. Aiming straight for a do‑anything humanoid demands huge capital, a decade‑long runway, and heroic advances in cost, safety, and regulation. They could change the world—or run out of time and cash first. Tesla Optimus is well-positioned due to its strong combination of hardware, software, manufacturing, supply chain, and capital advantages. Outside of that, I'm bearish on most other humanoid plays. This is a high-risk, infinite-reward strategy, and very few will survive.

The real dark horse: Chinese robotics [22]. Silicon Valley still holds an edge in embodied AI, but China is closing in fast and is already miles ahead in hardware. Sanctions on compute can only hold them back for so long. Chinese factories crank out actuators, sensors, and complete units at prices U.S. start-ups just can’t compete with. Until those cost lines converge, Chinese robotics companies could steamroll Western competitors.

Regardless of who wins, being surrounded by robots in everyday settings will feel surreal for a moment, until it doesn’t. Soon enough, we will rely on them for the most basic needs of our lives, just as we do with our AI assistants. When folding clothes or hauling pallets becomes “just what the robot does,” we’ll know the future has quietly arrived.

Appendix

Thanks to dozens of founders, investors, engineers and customers for their valuable time and thanks to Maulesh, Seema and Parshard for their review.

Brohan et al., RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control, arXiv:2307.15818, Jul 2023

GR00T N1: An Open Foundation Model for Generalist Humanoid Robots, arXiv:2503.14734, Mar 2025

Kim et al., OpenVLA: An Open-Source Vision-Language-Action Model, arXiv:2406.09246, Jun 2024

RLIF: Interactive Imitation Learning as Reinforcement Learning, arXiv:2311.12996

Interactive Imitation Learning in Robotics: A Survey, arXiv:2211.00600

Optimizing Edge AI: A Comprehensive Survey on Data, Model, and System Strategies, arXiv:2501.03265, Jan 2025

Zhiyuan Robotics, a 2-year-old startup, is pursuing a backdoor listing.